Creating Virtuoso

Introducing Virtuoso, our experiment that allows you to listen to and interact with the Library’s sheet music collection. Over 300 examples from the 19th century have been digitised and are available on our collection website. While you can see every detail of the sheet music in the collection image viewer, Virtuoso brings to life the most important part: the music.

This blog post documents how we built Virtuoso, from the music notation research and the transcription process, to our application approach and finally the musical output.

The entire application is available as open source here: https://github.com/slnsw/dxlab-virtuoso.

Music Notation

Seventh-century scholar Isidore of Seville said:

‘Unless sounds are held by the memory of man, they perish, because they cannot be written down.’

This desire to write down music has yielded a myriad of techniques over the millennia: Babylonian cuneiform tablets of 1400 BC, ‘neumes’ of the 9th century, and Guido of Arezzo’s hexachords, which eventually became the familiar Do Re Mi Fa Sol La Ti.

In China, bells dating back several centuries BC have been found inscribed with detailed information about pitch, scale and transposition. In the 15th century, Sejong the Great of Korea developed Jeongganbo, a technique that represents rhythm, pitch and time using symbols in a grid of squares. In Indonesia, gamelan and drum music have been notated in several staff-like ways. These include dots and squiggles on a horizontal set of lines, and a vertical ladder-like staff for recording the flexibility of percussive performances.

But when we think of music notation today, we generally think of the modern 5-line staff with its stemmed oval-shaped notes, time signatures, sharps, flats and so on. Much of the sheet music in the Library’s collection is of this type, and that is what we sought to investigate.

For many of us, however, sheet music is incomprehensible, which makes it very hard to imagine what the music actually sounds like. So we set about building a system that lets users hear our sheet music in the web browser.

ABC Notation

Modern music notation, as it has developed over the past few centuries, is a highly visual, detailed technique for encapsulating musical information. We researched various ways to represent music notation for our application and found ABC notation to be the most suitable. This widely used, text-based notation system can handle complex pieces of music. It is also highly portable and human-readable.
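To show what ‘human-readable’ means in practice, below is a short, made-up tune in ABC notation, held in a TypeScript string. It comes with a minimal sketch that reads the tune's header fields: X: (reference number), T: (title), M: (metre), L: (default note length) and K: (key). The tune and the `parseAbcHeaders` helper are illustrative only and are not part of Virtuoso or ABCjs. A real parser such as ABCjs handles far more of the standard.

```typescript
// A short, made-up tune in ABC notation (not from the Library's collection).
const tune = `X:1
T:Example tune
M:6/8
L:1/8
K:G
D|GAB cBA|B2G A2D|]`;

// Minimal sketch: collect single-letter header fields such as "T:" or "K:".
// In ABC the K: (key) field always ends the header, so we stop there.
function parseAbcHeaders(abc: string): Map<string, string> {
  const headers = new Map<string, string>();
  for (const line of abc.split("\n")) {
    const match = /^([A-Za-z]):(.*)$/.exec(line.trim());
    if (!match) break; // not a header line: the music has started
    headers.set(match[1], match[2].trim());
    if (match[1] === "K") break;
  }
  return headers;
}

const tuneHeaders = parseAbcHeaders(tune);
console.log(tuneHeaders.get("T"), tuneHeaders.get("M"), tuneHeaders.get("K")); // Example tune 6/8 G
```

Each line of the music itself is just as legible. `GAB cBA|` is six quavers, and lowercase letters sit an octave above uppercase ones.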

Most importantly, there is a modern open-source JavaScript library, ABCjs, that neatly renders ABC notation into clean SVG images of the staves. It is also flexible enough to connect the sheet music to sound.

When it comes to playing audio in the browser, our DX Lab Tech Lead, Kaho, was already working on an open-source web audio project called Reactronica in his spare time. It made sense to try it out in a real-world situation.

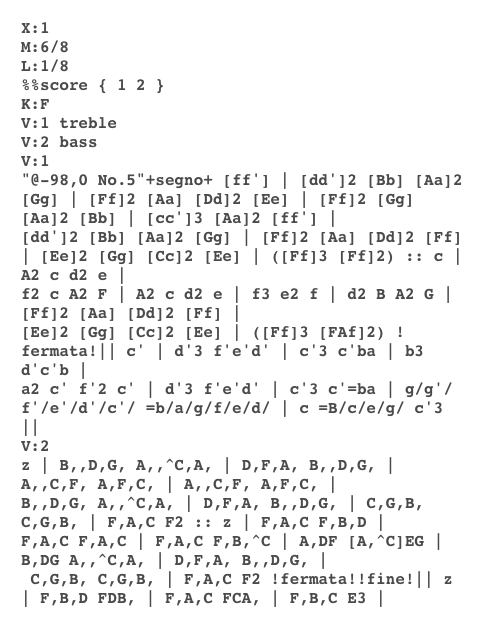

This is a simplified representation of how Virtuoso works:

The sheet music is transcribed as ABC notation, which is then fed into ABCjs. ABCjs renders the staves as SVG graphics. Via our custom code, it also passes note information to Reactronica, which plays those notes in your browser.

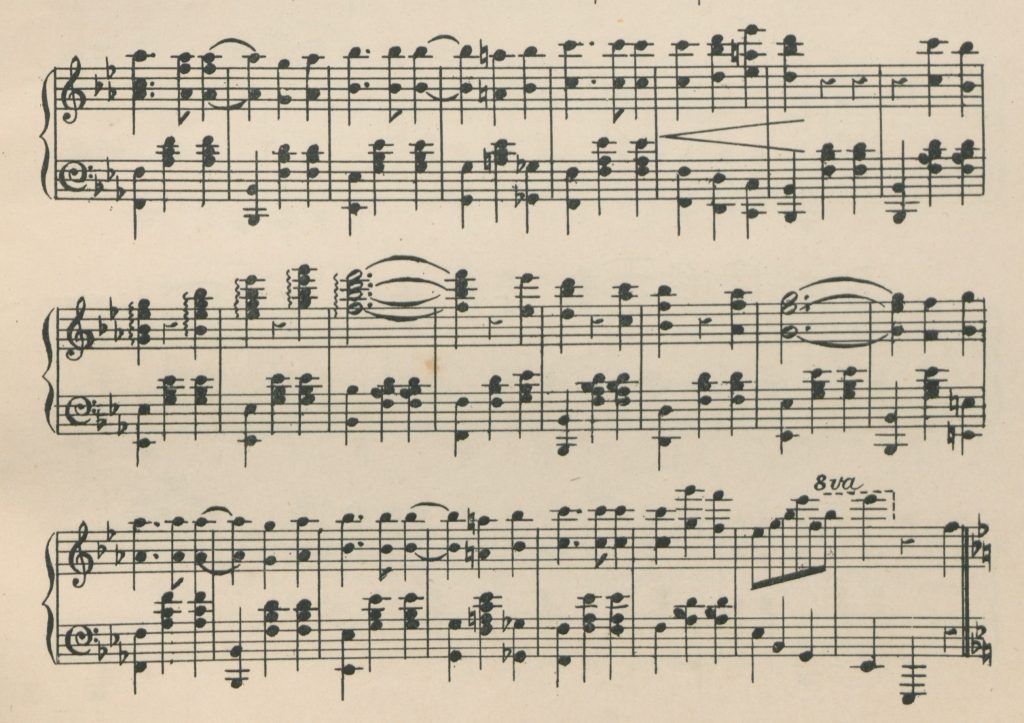
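The hand-off in the middle of that pipeline can be sketched as follows. This is a hypothetical, simplified model rather than Virtuoso’s actual code. It assumes each note event has already been broken down into notes per staff, and that the user’s settings assign one instrument to each staff. `StaffNotes`, `PlayCommand`, `routeNoteEvent` and the instrument names are invented for the example.

```typescript
// Hypothetical: the notes from one ABCjs note event, already split up by staff.
interface StaffNotes {
  staff: number; // staff index, 0 = top staff
  notes: string[]; // note names such as "C4"; empty when the staff is resting
}

// Hypothetical instruction for the audio side (Reactronica, in Virtuoso's case).
interface PlayCommand {
  instrument: string;
  notes: string[];
}

// Route each staff's notes to the instrument the user has assigned to that staff.
function routeNoteEvent(
  event: StaffNotes[],
  staffInstruments: Map<number, string>,
): PlayCommand[] {
  const commands: PlayCommand[] = [];
  for (const { staff, notes } of event) {
    const instrument = staffInstruments.get(staff);
    // Skip staves with no instrument assigned, or with nothing to play right now.
    if (instrument === undefined || notes.length === 0) continue;
    commands.push({ instrument, notes });
  }
  return commands;
}

// Example settings: vocal line on a flute, both piano staves on the piano.
const staffInstruments = new Map<number, string>([
  [0, "flute"],
  [1, "piano"],
  [2, "piano"],
]);
const commands = routeNoteEvent(
  [
    { staff: 0, notes: ["E5"] },
    { staff: 1, notes: ["C4", "E4", "A4"] },
    { staff: 2, notes: [] },
  ],
  staffInstruments,
);
console.log(commands.map((c) => `${c.instrument}: ${c.notes.join(" ")}`));
// [ 'flute: E5', 'piano: C4 E4 A4' ]
```

Keeping the staff-to-instrument mapping in one place means changing an instrument in the settings never touches the note-extraction code.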

Transcription

Initially we transcribed the first song into ABC notation by hand. There were several steep learning curves here: we had to learn ABC notation, and we had to brush up on (and learn new things about) musical notation. Consequently, the process was rather slow. One problem was not knowing what a certain symbol or marking on the sheet music was called, and therefore not being able to look up how to notate it in ABC. This list of musical symbols was extremely useful, especially when cross-referenced with this ABC notation cheat sheet.

Even with these resources, numerous issues came up that meant we had to improvise, approximate or leave things out. Overall we wanted two things: for the rendered SVG graphics to look as close to the sheet music as possible, and for the resulting audio to be as accurate as possible.

Here are some examples of where that wasn’t possible, and why.

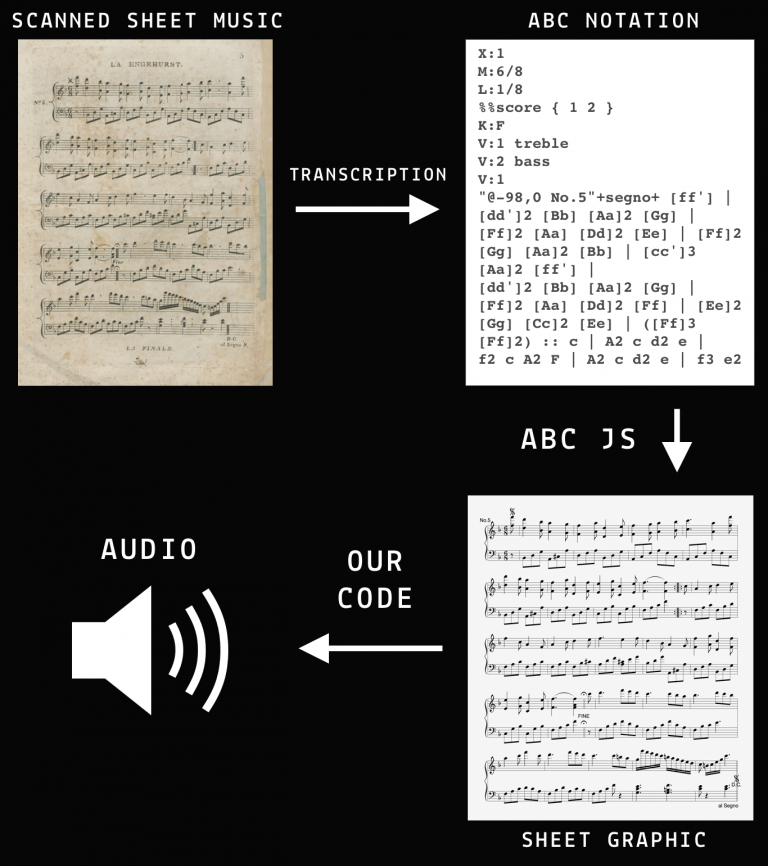

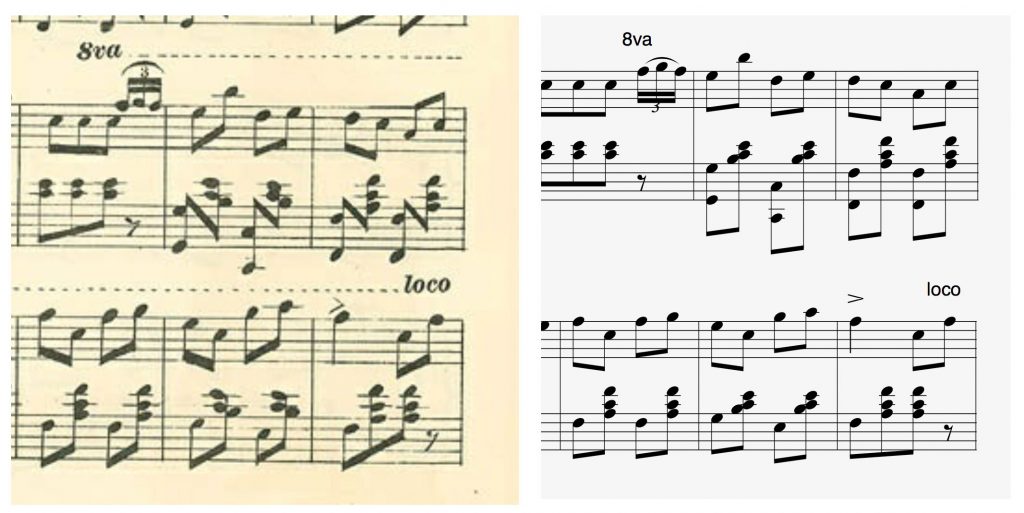

In the last bar of line 4 of ‘Our Sailor Prince’, some ‘double voicing’ occurs in the original sheet music. The piano’s treble clef (middle staff) has a chord and a single note, indicated above by the coloured marks. Their lengths differ from those of the other notes starting at the same time. ABC notation does have a way of notating double voicing. However, our code that handles note events breaks horribly when it encounters it. In addition, the way we attach instruments to staves in Reactronica currently expects one voice per staff.

The best approximation that didn’t break (or vastly complicate) our playback system was to move these notes to the bass clef, as seen on the right of the diagram above. The chord’s notes keep their correct length. The single note is a further compromise, because the bass-clef chord it is added to is only half as long. There are also visual differences in stem direction, but we could find no way to adjust the stem direction of individual notes in ABCjs.

These ‘double voicing’ issues occurred in several other places in this and other songs. We took similar measures to keep the resulting audio as close as possible to the original sheet music. One interesting side effect is that the adjusted notation rendered in the SVG is sometimes impossible for a human to play, because the range of notes on the bass clef becomes too wide for one hand.

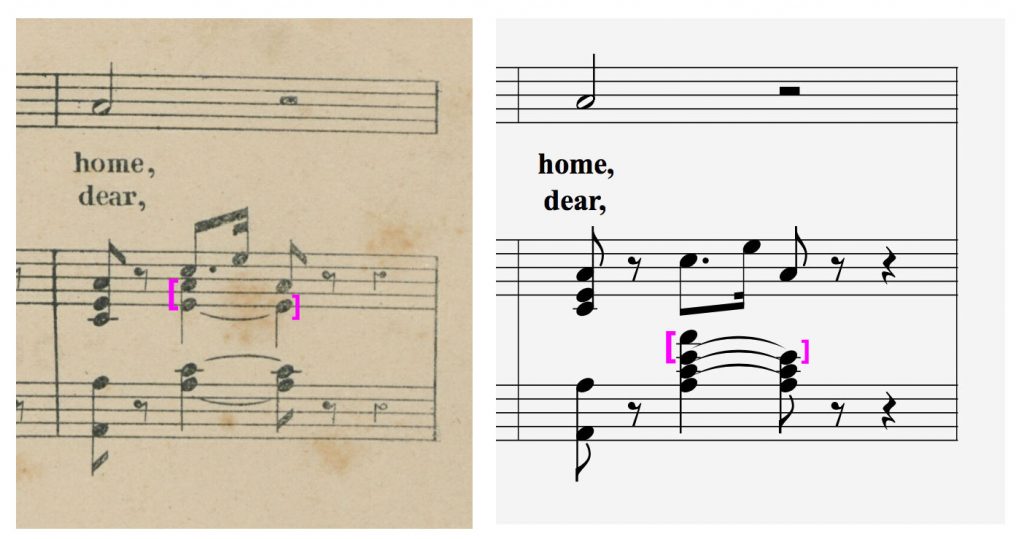

Numerous songs have the ‘8va’ marking shown above, often followed by a dotted line above the staff. This directs the musician to play everything under the dotted line one octave higher. We managed to place the ‘8va’ itself above the staff, but only as a text annotation, so it has no effect on the note data or the audio.
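If the notes were available as MIDI note numbers, honouring an 8va marking would be straightforward in principle: add 12 semitones to every note under the dotted line. The sketch below assumes a hypothetical `TimedNote` type with start times in beats, and an 8va span given as a start beat and an end beat. Virtuoso does not currently do this.

```typescript
// Hypothetical note representation: MIDI pitch number plus start time in beats.
interface TimedNote {
  pitch: number; // MIDI note number, 60 = middle C
  start: number; // beats from the start of the piece
}

const OCTAVE = 12; // semitones in an octave

// Raise every note starting inside [spanStart, spanEnd) by one octave,
// which is what an "8va" marking and its dotted line ask the player to do.
function applyOttava(notes: TimedNote[], spanStart: number, spanEnd: number): TimedNote[] {
  return notes.map((note) =>
    note.start >= spanStart && note.start < spanEnd
      ? { ...note, pitch: note.pitch + OCTAVE }
      : note,
  );
}

const phrase: TimedNote[] = [
  { pitch: 72, start: 0 }, // C5
  { pitch: 76, start: 1 }, // E5
  { pitch: 79, start: 2 }, // G5
];
console.log(applyOttava(phrase, 1, 3).map((n) => n.pitch)); // [ 72, 88, 91 ]
```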

Currently, markings that indicate dynamics or volume (such as accents, crescendos, forte and pianissimo) are correctly notated in the ABC notation but have no effect on the audio. Hopefully this will be remedied once the MIDI pitches data is working (see below). That data contains ‘velocity’ information, MIDI’s measure of how hard a note is struck, which usually determines its loudness. The same applies to timing adjustments such as fermatas (holds) and grace notes.
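Once velocity data is available, one simple approach would be a lookup from the most recent dynamic marking to a velocity, with a boost for accented notes. The numbers below are assumptions, chosen to be roughly evenly spaced across MIDI’s 1 to 127 velocity range. The accent boost is a hypothetical choice. Neither comes from ABCjs or Reactronica.

```typescript
// Assumed mapping from dynamic markings to MIDI velocity (1-127).
// Illustrative values only; not taken from ABCjs or Reactronica.
const DYNAMIC_VELOCITY: Record<string, number> = {
  ppp: 16,
  pp: 33,
  p: 49,
  mp: 64,
  mf: 80,
  f: 96,
  ff: 112,
  fff: 126,
};

const MAX_VELOCITY = 127;
const ACCENT_BOOST = 20; // hypothetical extra velocity for an accented note

// Velocity for a note, given the dynamic marking in force and whether the
// note itself is accented. Unknown markings fall back to mezzo-forte.
function velocityFor(dynamic: string, accented: boolean): number {
  const base = DYNAMIC_VELOCITY[dynamic] ?? DYNAMIC_VELOCITY["mf"];
  return Math.min(MAX_VELOCITY, base + (accented ? ACCENT_BOOST : 0));
}

console.log(velocityFor("pp", false)); // 33
console.log(velocityFor("ff", true)); // 127 (112 + 20, capped at 127)
```

Gradual changes such as crescendos would need more than a lookup, for example interpolating between the starting and ending velocities across the marked notes.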

Machine transcription

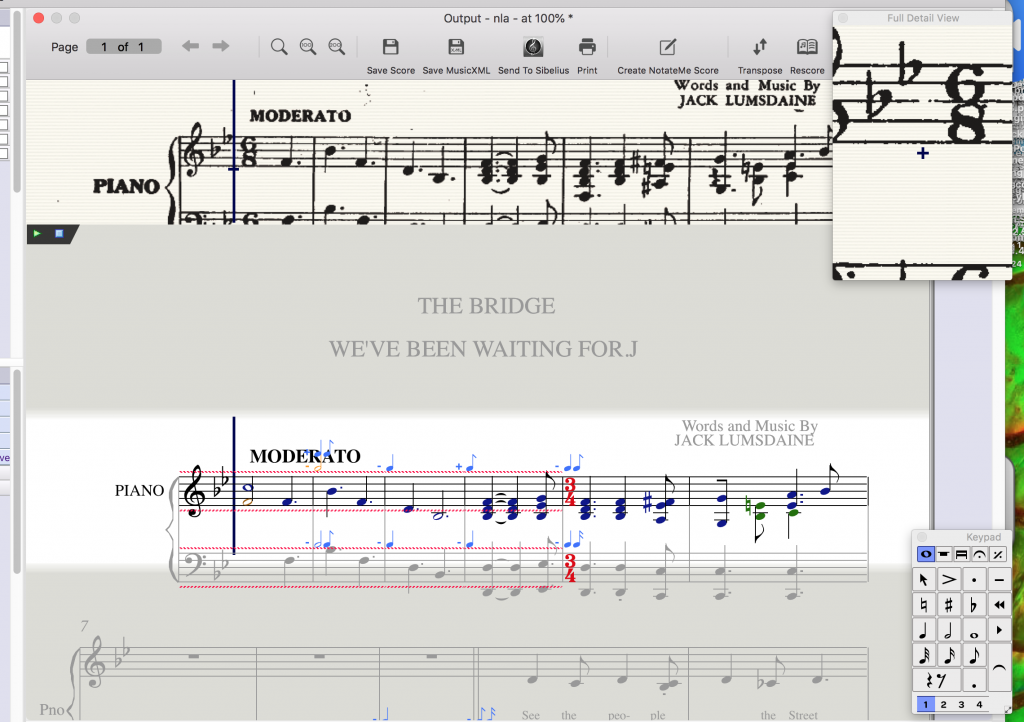

We decided to explore whether software could help speed up the transcription process. After looking at various options, PhotoScore seemed a good choice, not least because it had a free trial. Once an image is loaded into the software, PhotoScore analyses it, identifying the location of the staves, notes and so on.

As you can see above, the interface is quite complicated, but after a thorough read of the manual it became quite a speedy way of getting the sheet music into digital form. Depending on the condition of the sheet music, however, it was necessary to ‘correct’ PhotoScore’s interpretation, sometimes a little, sometimes a lot.

Compare the scan of the original sheet music at the top of the interface with PhotoScore’s interpretation lower down. The key signature is correct, but the 6/8 time signature has been misinterpreted as a couple of notes. Later in the staff, a random 3/4 time signature appears. The red lines tell the user that the notes PhotoScore has gleaned from the score add up to too much or too little time for the bar, according to the current time signature. Other imperfections are evident, such as incorrect note lengths, notes missing from chords, missing or incorrect accidentals, notes beamed together when they shouldn’t be, and so on.
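PhotoScore’s red-line check is easy to reason about: add up the note lengths in a bar and compare the total with what the time signature allows. Below is a minimal sketch of that idea, our illustration rather than PhotoScore’s implementation. It assumes note lengths are given as fractions of a whole note. Exact fractions avoid rounding errors for lengths such as triplet quavers (1/12 of a whole note).

```typescript
// Note lengths as exact fractions of a whole note, e.g. { num: 1, den: 8 } for a quaver.
interface Fraction {
  num: number;
  den: number;
}

function gcd(a: number, b: number): number {
  return b === 0 ? Math.abs(a) : gcd(b, a % b);
}

// Add two fractions and reduce the result to lowest terms.
function addFractions(x: Fraction, y: Fraction): Fraction {
  const num = x.num * y.den + y.num * x.den;
  const den = x.den * y.den;
  const g = gcd(num, den);
  return { num: num / g, den: den / g };
}

// Compare a bar's total length with the beats/beatUnit allowed by the time signature.
function checkBar(notes: Fraction[], beats: number, beatUnit: number): string {
  const total = notes.reduce(addFractions, { num: 0, den: 1 });
  // Cross-multiply to compare total.num / total.den with beats / beatUnit.
  const actual = total.num * beatUnit;
  const expected = beats * total.den;
  if (actual === expected) return "ok";
  return actual < expected ? "too short" : "too long";
}

const quaver: Fraction = { num: 1, den: 8 };
const crotchet: Fraction = { num: 1, den: 4 };
// In 6/8, six quavers fill the bar exactly...
console.log(checkBar([quaver, quaver, quaver, quaver, quaver, quaver], 6, 8)); // ok
// ...but two crotchets and three quavers add up to 7/8, one quaver too many.
console.log(checkBar([crotchet, quaver, quaver, quaver, crotchet], 6, 8)); // too long
```

A bar that passes this check can still be wrong, since swapping two note lengths keeps the total the same. That is why the corrections still needed a human eye.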

Printed vs handwritten

PhotoScore has two ‘engines’ for interpreting sheet music: one designed for printed sheet music, another for handwritten. After the printed engine produced the misinterpretations above, we thought the handwritten engine might have better luck. However, it was worse. That is probably fair enough, since the sheet music is generally very neat, and it seems to be the aged condition of the items that fools the printed-music engine.

So using PhotoScore meant a round of corrections before each page could be used. The next step was to get the music into ABC notation. However, PhotoScore cannot export that format. It does export MusicXML, which can then be converted to ABC notation using online converters such as this one.

The ABC notation created this way had its own issues. Occasionally PhotoScore would notate passages with double voicing, correctly or incorrectly, and this would really mess up the ABC notation. Developing this pipeline, from sheet music to PhotoScore to MusicXML to ABC notation, was a learning process, with gradual improvement as we went along. It still ended up being faster than transcribing by hand.

Our code

When the user hits play, ABCjs starts outputting a packet of data for each ‘note event’ in real time. We needed to take the note information from each packet and send it to Reactronica so audio could be generated. ABCjs has a feature whereby the note event should include something called MIDI pitches. MIDI stands for Musical Instrument Digital Interface, a system developed in the 1980s to help electronic musical instruments talk to each other.

As computers became involved in sequencing, recording and making music, MIDI also made its way into software. MIDI doesn’t contain any audio, just instructions for making it. For example, a MIDI message might say ‘start playing a note at this pitch, with this volume, now, on instrument X’.
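A MIDI ‘note on’ message, the standard form of that instruction, is just three bytes:

- a status byte (0x90 combined with a channel number from 0 to 15);
- a note number (0 to 127, where 60 is middle C);
- a velocity (0 to 127).

The ‘instrument X’ part is handled by the channel, which the receiving device maps to a sound. The sketch below builds such a message to illustrate the format. `noteOn` is our own helper, not part of any MIDI library.

```typescript
// Build a three-byte MIDI "note on" message.
// The channel (0-15) is how MIDI says "on instrument X": the receiving
// device decides which sound is assigned to each channel.
function noteOn(channel: number, note: number, velocity: number): Uint8Array {
  if (channel < 0 || channel > 15) throw new RangeError("channel must be 0-15");
  if (note < 0 || note > 127) throw new RangeError("note must be 0-127");
  if (velocity < 0 || velocity > 127) throw new RangeError("velocity must be 0-127");
  const NOTE_ON = 0x90; // high nibble 9 = "note on"
  return new Uint8Array([NOTE_ON | channel, note, velocity]);
}

// "Start playing middle C (60), fairly loudly (100), now, on channel index 2."
const message = noteOn(2, 60, 100);
console.log(Array.from(message, (b) => ("0" + b.toString(16)).slice(-2)).join(" ")); // 92 3c 64
```

Most software numbers channels 1 to 16 for users, so channel index 2 here would usually be displayed as channel 3.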

Open source reality

ABCjs is in active development and, like many open-source projects, is not completely finished. Furthermore, its author works on it in their spare time rather than as a paid, full-time job. When we started developing Virtuoso, the MIDI pitches section of the note event was always blank.

Consequently, we wrote some rather complicated code to work around this. It uses other information in the note event, namely the positions in the ABC notation string where the current notes are located. It then converts those text snippets into the note format that Reactronica needs. This code is certainly not perfect. It has to keep track of the key signature and sharpen, flatten or naturalise notes accordingly, adjust note lengths in the middle of a triplet, and much more. It currently has many shortcomings, such as not dealing with double flats or double sharps.
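To give a flavour of what that conversion involves, here is a heavily reduced sketch, not Virtuoso’s actual code. It does two things:

- turns one ABC note token such as `^c'` or `B,` into a MIDI note number, applying the key signature unless the note carries its own accidental (including double sharps `^^` and double flats `__`);
- scales a note length inside a triplet.

It assumes the key signature has already been worked out as a map from note letter to semitone offset. Among other things, it ignores accidentals carrying through the rest of the bar and tuplets other than triplets.

```typescript
// Semitone offset of each natural note from C.
const LETTER_SEMITONES: Record<string, number> = {
  C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11,
};

// Semitone change for each ABC accidental prefix.
const ACCIDENTALS: Record<string, number> = {
  "^": 1, "^^": 2, "_": -1, "__": -2, "=": 0,
};

// Key signature as letter -> semitone offset, e.g. G major is { F: 1 }.
type KeySignature = Record<string, number>;

// Convert one ABC note token (accidental, letter, octave marks) to a MIDI number.
// In ABC, "C" is middle C (MIDI 60), "c" is the octave above,
// each "'" raises the note an octave and each "," lowers it one.
function abcNoteToMidi(token: string, key: KeySignature): number {
  const match = /^(\^\^|\^|__|_|=)?([A-Ga-g])([',]*)$/.exec(token);
  if (!match) throw new Error(`Not a simple ABC note: ${token}`);
  const [, accidental, letter, octaveMarks] = match;
  const upper = letter.toUpperCase();
  let midi = 60 + LETTER_SEMITONES[upper];
  if (letter !== upper) midi += 12; // lowercase letters are one octave higher
  for (const mark of octaveMarks) midi += mark === "'" ? 12 : -12;
  // An explicit accidental overrides the key signature; otherwise apply the key.
  midi += accidental !== undefined ? ACCIDENTALS[accidental] : (key[upper] ?? 0);
  return midi;
}

// Inside a triplet "(3", three notes take the time of two: scale each length by 2/3.
function tripletLength(length: number): number {
  return (length * 2) / 3;
}

const gMajor: KeySignature = { F: 1 };
console.log(abcNoteToMidi("F", gMajor)); // 66 (F sharp, from the key signature)
console.log(abcNoteToMidi("=F", gMajor)); // 65 (the natural sign cancels the key)
console.log(abcNoteToMidi("_B,", gMajor)); // 58 (B flat below middle C)
console.log(tripletLength(0.5)); // 0.3333333333333333
```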

Later in the development of Virtuoso (we paused for a while to get The Diary Files out the door), a new beta version of ABCjs was released, and it did include the MIDI pitches info! We were very excited, as it meant we could do away with our incomplete code and write a much simpler converter between the MIDI info and what Reactronica uses. However, our excitement was short-lived. The MIDI pitches data was not tied to the staff the notes belonged to. It was just a list of MIDI data for that moment in time. Our songs have two or three staves, each of which can be voiced by any of several instruments. So we need to know which staff each MIDI pitch belongs to in order to send the note to the correct instrument. We have let the author of ABCjs know, and hopefully the staff number will be added in a future release.
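If each MIDI pitch did carry a staff number, the converter could shrink to something like the sketch below. It groups the pitches sounding at one moment by staff, then turns each MIDI number into a note name for the instrument on that staff. The `staff` field is hypothetical: it is the very thing we asked for. Note names in scientific pitch notation, such as `C4`, are an assumed input format for the audio side.

```typescript
// Hypothetical MIDI pitch entry, assuming a future `staff` field.
interface MidiPitch {
  pitch: number; // MIDI note number
  staff: number; // which staff the note belongs to (assumed future field)
}

const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// MIDI 60 is middle C, written "C4" in scientific pitch notation.
function midiToNoteName(midi: number): string {
  const octave = Math.floor(midi / 12) - 1;
  return NOTE_NAMES[midi % 12] + octave;
}

// Group the pitches sounding at one moment by staff, as note names.
function groupByStaff(pitches: MidiPitch[]): Map<number, string[]> {
  const byStaff = new Map<number, string[]>();
  for (const { pitch, staff } of pitches) {
    const names = byStaff.get(staff) ?? [];
    names.push(midiToNoteName(pitch));
    byStaff.set(staff, names);
  }
  return byStaff;
}

const moment: MidiPitch[] = [
  { pitch: 76, staff: 0 },
  { pitch: 60, staff: 1 },
  { pitch: 64, staff: 1 },
  { pitch: 45, staff: 2 },
];
const grouped = groupByStaff(moment);
console.log(grouped.get(1)); // [ 'C4', 'E4' ]
console.log(grouped.get(2)); // [ 'A2' ]
```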

Musical expertise

At various points throughout this project, we were lucky to be able to draw on the significant expertise of two staff members, Shari Amery and Meredith Lawn. We owe them a great debt of gratitude for their input and keen ears. Shari helped us understand what some of the more obscure markings on the sheet music were called. Meredith used her in-depth knowledge of the Library’s collection to suggest a shortlist of songs to include. Later, she kindly provided a detailed list of issues, corrections and feedback for all of the songs. She noticed amazingly small details, such as one note of a chord being slightly off (in many places!). One of her observations exposed a very tricky-to-find bug in our code, related to key changes that happened mid-staff. They were only being applied to the notes of one bar, rather than to the rest of the line.
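The key-change bug can be reproduced in miniature. In the hypothetical sketch below, a bar may begin with a key change, as an inline `[K:G]` would in ABC. The fix is to carry the current key forward from bar to bar instead of letting it lapse at the bar line. The `Bar` type and the letter-to-offset key representation are simplifications invented for this example.

```typescript
// Hypothetical, simplified bar: an optional key change plus its notes (as letters).
interface Bar {
  keyChange?: Record<string, number>; // e.g. { F: 1 } after [K:G]
  notes: string[];
}

// Resolve each note's key-signature offset, carrying the key across bar lines.
// The buggy behaviour was equivalent to dropping back to the old key at the
// next bar; here `currentKey` persists until the next key change.
function applyKeys(bars: Bar[], initialKey: Record<string, number>): number[][] {
  let currentKey = initialKey;
  return bars.map((bar) => {
    if (bar.keyChange) currentKey = bar.keyChange;
    return bar.notes.map((letter) => currentKey[letter] ?? 0);
  });
}

const cMajor: Record<string, number> = {};
const bars: Bar[] = [
  { notes: ["F", "G"] },
  { keyChange: { F: 1 }, notes: ["F", "A"] }, // key changes to G major mid-line
  { notes: ["F", "E"] }, // still G major, so F is sharpened
];
console.log(applyKeys(bars, cMajor)); // [ [ 0, 0 ], [ 1, 0 ], [ 1, 0 ] ]
```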

Virtual orchestra

Our experts also pointed out that the clarinet samples seemed to lag behind the other sounds when played together. This was rather mysterious, but it led us to discover an issue with the orchestral sample library we used (the VSCO 2 library, open-sourced and licensed under Creative Commons Zero).

Each instrument in the library (piano, flute, clarinet etc.) has been recorded one note at a time, across all octaves in its range, with each note in a separate sound file. For many instruments, rather than all 12 notes of every octave, only 3 or 4 notes were recorded (A, C and E for the flute, for example). Within Virtuoso, we assign these recordings to a ‘sampler’ instrument in Reactronica. The sampler pitches the closest recording up or down, if necessary, to sound the note being played.
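Pitching a recording up or down amounts to playing it faster or slower: shifting by n semitones needs a playback rate of 2^(n/12). Reactronica’s sampler does this for us. The sketch below only illustrates the idea, choosing the nearest recorded note and the rate needed to reach the target. The MIDI numbers assumed for the flute’s A, C and E samples (A4, C5 and E5) are for illustration.

```typescript
// Pick the recorded sample closest in pitch to the requested note, and the
// playback rate needed to shift it: each semitone is a factor of 2^(1/12).
function nearestSample(
  target: number,
  recorded: number[],
): { sample: number; semitones: number; rate: number } {
  if (recorded.length === 0) throw new Error("no samples recorded");
  let sample = recorded[0];
  for (const candidate of recorded) {
    if (Math.abs(candidate - target) < Math.abs(sample - target)) sample = candidate;
  }
  const semitones = target - sample;
  return { sample, semitones, rate: Math.pow(2, semitones / 12) };
}

// Assumed flute samples at A4, C5 and E5 (MIDI 69, 72 and 76).
const fluteSamples = [69, 72, 76];
const result = nearestSample(74, fluteSamples); // D5
console.log(result.sample, result.semitones, result.rate.toFixed(4)); // 72 2 1.1225
```

Stretching a sample too far makes it sound unnatural, which is why recording a few notes per octave, rather than one, matters.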

On investigation, we discovered that each clarinet recording had up to a fifth of a second of silence at the start. This led us to examine all the other files as well, and in some cases to tweak volumes, to get a level of consistency between the various instruments.
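That kind of check can be automated. The sketch below is an illustration rather than the process we used. It assumes each recording has been decoded into a `Float32Array` of samples between -1 and 1, the form the Web Audio API provides for each channel. It measures and trims leading silence below an assumed threshold, and computes a simple peak-normalising gain as one way to even out volume between instruments.

```typescript
// Index of the first sample whose level reaches `threshold`.
// Samples are decoded audio in the range -1..1; the threshold is an assumption.
function firstAudibleIndex(samples: Float32Array, threshold: number): number {
  let i = 0;
  while (i < samples.length && Math.abs(samples[i]) < threshold) i++;
  return i;
}

// How many seconds of near-silence precede the sound.
function leadingSilenceSeconds(samples: Float32Array, sampleRate: number, threshold = 0.01): number {
  return firstAudibleIndex(samples, threshold) / sampleRate;
}

// A copy of the recording with the leading silence cut off.
function trimLeadingSilence(samples: Float32Array, threshold = 0.01): Float32Array {
  return samples.slice(firstAudibleIndex(samples, threshold));
}

// Gain that would scale the loudest sample to `targetPeak`.
function peakGain(samples: Float32Array, targetPeak = 0.9): number {
  let peak = 0;
  for (let i = 0; i < samples.length; i++) peak = Math.max(peak, Math.abs(samples[i]));
  return peak === 0 ? 1 : targetPeak / peak;
}

const SAMPLE_RATE = 44100;
const clip = new Float32Array(SAMPLE_RATE); // one second, all zeros (silence)
clip.fill(0.5, Math.round(0.2 * SAMPLE_RATE)); // placeholder "sound" starting at 0.2 s
console.log(leadingSilenceSeconds(clip, SAMPLE_RATE)); // 0.2
console.log(trimLeadingSilence(clip).length); // 35280
console.log(peakGain(clip)); // 1.8
```

Peak normalisation is only a rough guide to perceived loudness, so listening tests remain the final judge.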

It should also be noted that most of these instruments can be played in different ways: staccato, sustained, with vibrato, softly, forcefully and so on. The sample library often contains separate folders of sounds for these different playing techniques. At this stage our system can only use one set of recordings per instrument, so some care was taken in choosing which playing style to use for Virtuoso. Each choice is of course a trade-off, and may mean an instrument doesn’t always sound as good as it could for the particular music being played.

Future plans

We have a few features we’d like to add in the future, such as a metronome, accompanying drum beats and more elaborate sampling techniques. We’d also love to add more songs, but the transcription process can be slow and laborious. New AI and machine learning applications may help speed this up. The Library isn’t the only organisation with sheet music, so perhaps we could partner with another institution and share resources.

The code for this project uses the same front end stack as the Library’s new collection website, so potentially it could make its way there as a custom sheet music player.

In the meantime, we have open-sourced the entire application on GitHub: https://github.com/slnsw/dxlab-virtuoso.

Comments

I found a hacky way to notate the Sailor Prince example. It’s admittedly convoluted but it looks like the original and sounds right:

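```
X:1
T: Sailor Prince line 4 last bar
M:4/4
% RH and RH2 share the treble staff; the braces join it with LH as a piano system
%%score {(RH RH2) LH}
L:1/4
K:C
V:RH
[CEA]/z/ c3/4e// A/ z/ z |
% Second treble voice; "&" overlays a further voice within the same bar
V:RH2
x E- E x & x A x2 |
V:LH clef=bass
[G,,A,]/ z/ [A,C]-[A,C]/ z/ z|
```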

Hi Paul,

Thanks so much for getting in touch and taking the time to look into how we might more accurately represent the ‘double voicing’ instances in our sheet music.

I have tested out the above, and it certainly does render the notation just as it appears in the sheet music in our collection, which is great. It even plays back using our code, which surprised me! I thought the ampersand in RH2 would really mess it up. I think our code is not playing the A, though. I rewrote that line as:

x [A(E] E) x |

which ends up looking the same, and definitely plays the A.

The biggest issue now, however, is that splitting the treble clef into two voices within the ABC notation gives us a total of 3 instruments in our settings drop-down (4 if the ‘voice’ top line is added back in). We feel this would confuse users somewhat. Given more time, I’m sure we could come up with a way of specifying that certain pairs of voices are played by the same instrument, which would solve it.

Thanks again for your help and for your continued work on ABCjs!

-LukeD