Making NewSelfWales

Post by Kaho Cheung and Luke Dearnley.

In October 2018, as part of the Library’s gallery transformation program, the DX Lab launched #NewSelfWales, a community generated selfie exhibition. While it closed in February 2019, the exhibition can now be experienced online at dxlab.sl.nsw.gov.au/newselfwales.

This blog post documents all the technology behind this digital exhibition and website. We also have posts about the exhibition wrap up and our UX research methodology. Part of the code is publicly available on GitHub – https://github.com/slnsw/dxlab-newselfwales.

As DX Lab’s largest experiment ever, #NewSelfWales crosses multiple disciplines and domains, spanning the physical and the digital, design and engineering. This exhibition also provided a contrast to the other Library galleries, showcasing the diverse faces we have in New South Wales today.

This is a long post, so we have conveniently summarised it below:

- Overview

- Digital Architecture

- Image Feed

- In-gallery Selfies

- Instagram Selfies

- Collection Portraits

- WordPress Backend

- Selfie Moderation

- Frontend Apps

- Infinite Image Feed

- Website

- Projectors and Hardware

- Summary

Overview

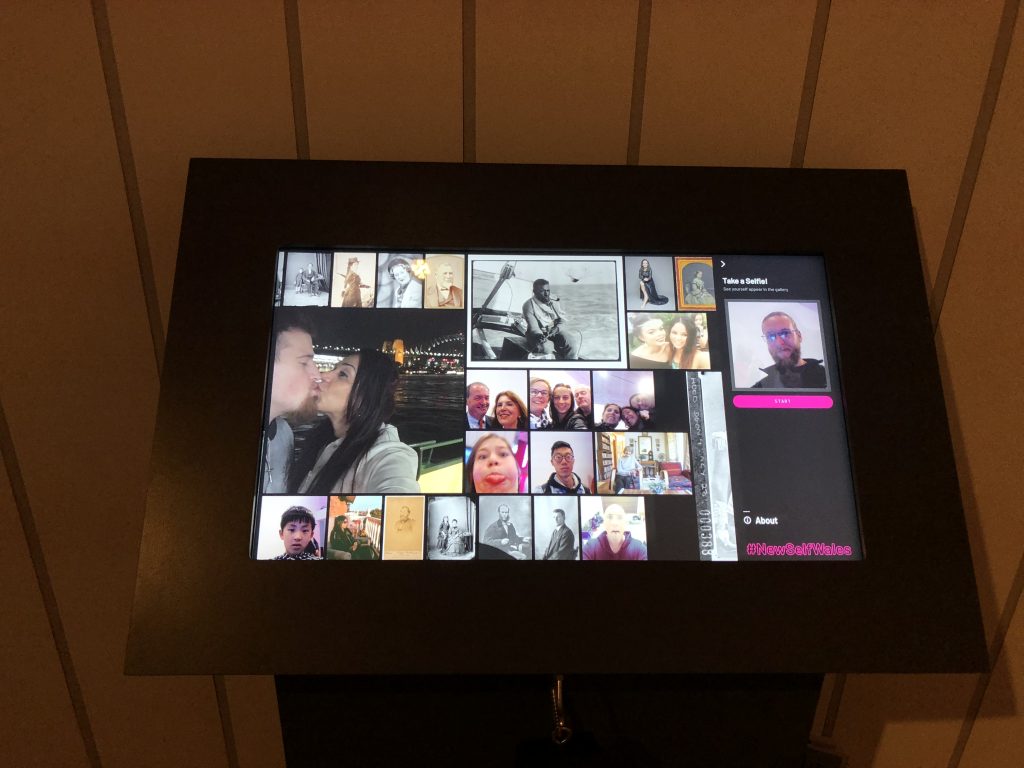

#NewSelfWales’ centre-piece is a large 8m x 2m projection of an animated image feed, populated with in-gallery selfies, Instagram selfies and collection portraits. Visitors take in-gallery selfies using the touchscreen interactive kiosks and a custom 3D printed camera. The new selfie appears moments later on the image feed, surrounded by other selfies and portraits from the Library’s collection.

This seemingly simple interaction actually relies on a network of live data, custom applications and backend services.

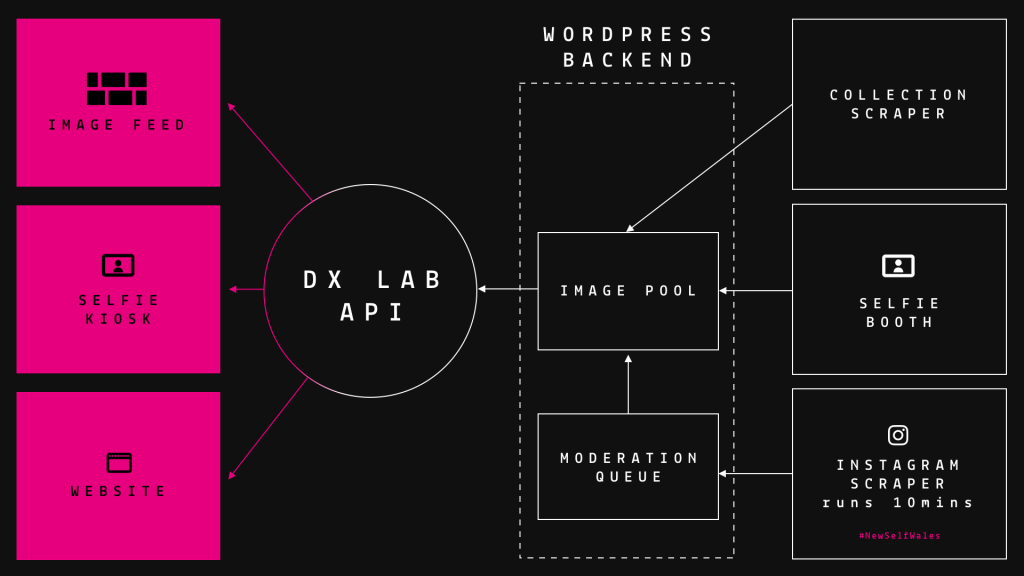

Digital Architecture

The diagram below gives you a high level view of all the moving parts.

Image Feed

The Image Feed is a good starting point as it is the most prominent part of the exhibition. As mentioned before, there are three types of images within the feed:

- In-gallery Selfies – taken by the in-gallery selfie camera (20%)

- Instagram Selfies – tagged with #NewSelfWales on Instagram (20%)

- Collection Portraits – curated from the Library’s portrait collection (60%)

The % indicates what approximate proportion that image type has in the feed. As you can see, we prioritised Collection Portraits to showcase our collection – they also tended to have the best image quality.
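As a rough illustration of how such a mix could be produced (a sketch only, not the exhibition's actual code), each slot in the feed can be filled by a weighted random draw. The type labels and the `pick_image_types` helper are hypothetical names; only the 20/20/60 split comes from the figures above.

```python
import random

# Approximate proportions quoted above; the labels themselves are hypothetical.
FEED_WEIGHTS = {
    "gallery_selfie": 0.2,
    "instagram_selfie": 0.2,
    "portrait": 0.6,
}


def pick_image_types(count, rng=None):
    """Return `count` image type labels drawn according to FEED_WEIGHTS."""
    if rng is None:
        rng = random.Random()
    types = list(FEED_WEIGHTS)
    weights = [FEED_WEIGHTS[t] for t in types]
    # random.choices samples with replacement, honouring the relative weights.
    return rng.choices(types, weights=weights, k=count)


# Example: decide which type of image fills each of the next ten slots.
next_slots = pick_image_types(10)
```

Because each draw is independent, any single batch will only approximate the target split; the proportions even out over many fetches.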

Beginning with in-gallery selfies, we’ll go through each image type and describe how the image ends up in our system.

In-gallery Selfies

To take these selfies, visitors use an interactive touchscreen selfie kiosk and custom 3D printed camera. These cameras are shaped like large phones in order to mimic an authentic ‘selfie’ experience.

Inside the camera case is a USB HD webcam that sends video to our application. When the user presses the physical button on the camera, our application captures the image and sends it to our backend (WordPress in our case).

The button is connected to a small circuit board which emulates a keyboard, so the application merely has to listen for a specific keypress to take a still image from the webcam. We chose an arcade style button as they have a long history of surviving brutal pummelling from the public.

Once the selfie has been taken, it appears on the image feed.

Instagram Selfies

As part of the Library’s marketing campaign for the new galleries, we asked users to add the hashtag #NewSelfWales to their ‘grams’ if they wanted their selfie to be shown on the image feed and collected by the Library.

To ‘harvest’ these selfies, we first investigated Instagram’s public API. Unfortunately it had no ability to search by hashtag and was also being deprecated. Luckily we discovered the Instagram website had a unique URL for every hashtag, in our case: https://www.instagram.com/explore/tags/newselfwales/

Getting the selfie data turned out to be easier than expected. Rather than needing to parse plain HTML, a giant blob of structured data (in JSON format) was readily accessible. We wrote a harvester to capture this information, running every ten minutes and updating our backend whenever a new selfie appeared.
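To give a flavour of this approach, here is a minimal sketch of pulling a JSON blob out of a page and spotting new entries. It runs against an inline sample rather than the live site, and the variable name `window._sharedData`, the `posts` key and the field names are assumptions made for illustration; the real page structure was more deeply nested and may have changed since.

```python
import json
import re

# Hypothetical page shape: a script tag assigning a JSON object to a variable.
SAMPLE_HTML = """
<html><body>
<script>window._sharedData = {"posts": [
  {"shortcode": "abc123", "image_url": "https://example.com/a.jpg", "username": "alice"},
  {"shortcode": "def456", "image_url": "https://example.com/b.jpg", "username": "bob"}
]};</script>
</body></html>
"""

# Capture everything between the assignment and the closing script tag.
BLOB_PATTERN = re.compile(
    r"window\._sharedData\s*=\s*(\{.*?\});</script>", re.DOTALL
)


def extract_posts(html):
    """Return the list of post dicts embedded in the page, or [] if none found."""
    match = BLOB_PATTERN.search(html)
    if match is None:
        return []
    data = json.loads(match.group(1))
    return data.get("posts", [])


def find_new_posts(html, known_shortcodes):
    """Keep only posts whose shortcode has not been harvested before."""
    return [p for p in extract_posts(html) if p["shortcode"] not in known_shortcodes]
```

A scheduled job would then call something like `find_new_posts` on each run and upload whatever comes back to the backend.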

Collection Portraits

The Library has a rich collection of historical and modern portraits, readily available on the Library’s online collection. Using an experimental collection API, we wrote a harvester that searched for the term ‘portrait’ in our subject headings and then extracted the images and metadata. This harvester could also pull in items by object number, so specially selected items or lists of items could be targeted. The harvest took a few days to run and brought in around 30,000 items, which were painstakingly culled down to around 5,500.

Similar to In-gallery and Instagram Selfies, the collection harvester uploads data and images to our backend ‘image pool’ in WordPress.

WordPress Backend

Rather than building a backend from scratch, we leveraged WordPress’s admin system and used it to store images and metadata. We created Custom Post Types for the three sources – portraits, gallery selfies and Instagram selfies. Our frontend applications use React, so they communicate with WordPress via the REST API. The DX Lab website uses a similar microservice architecture.
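For readers unfamiliar with the WordPress REST API, Custom Post Types that are exposed to REST get their own collection route under `/wp-json/wp/v2/`. The sketch below builds such a URL; the three route names and the `example.com` site are hypothetical placeholders, not the names used in the exhibition.

```python
from urllib.parse import urlencode

# Hypothetical REST route names for the three Custom Post Types.
POST_TYPES = ("portraits", "gallery-selfies", "instagram-selfies")


def build_rest_url(site, post_type, page=1, per_page=20):
    """Build a WordPress REST collection URL for one Custom Post Type."""
    if post_type not in POST_TYPES:
        raise ValueError(f"unknown post type: {post_type}")
    # per_page and page are the standard WordPress pagination parameters.
    query = urlencode({"per_page": per_page, "page": page})
    return f"{site.rstrip('/')}/wp-json/wp/v2/{post_type}?{query}"


url = build_rest_url("https://example.com", "portraits", page=2, per_page=50)
```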

Selfie Moderation

As with other community generated projects, moderation is a key issue. After much discussion, we decided on post-moderating in-gallery selfies and pre-moderating Instagram selfies.

Post-Moderation (In-gallery Selfies)

For in-gallery selfies, post-moderation gives the user (almost) instant gratification of seeing their selfie appear in the exhibition while they are still in the space. Given the very public nature of the kiosk area, being well lit and monitored by security cameras, we considered the risk of misdeeds to be quite low.

The actual post-moderation part is handled in the WordPress admin. In-gallery selfies are saved as Approved/Published, and we periodically check and reject the odd unwanted image. Most have been removed for being blurry or for being pictures of the roof, rather than for anything malicious. In the worst case it would have been very quick and easy to switch the kiosk code over to a pre-moderation model.

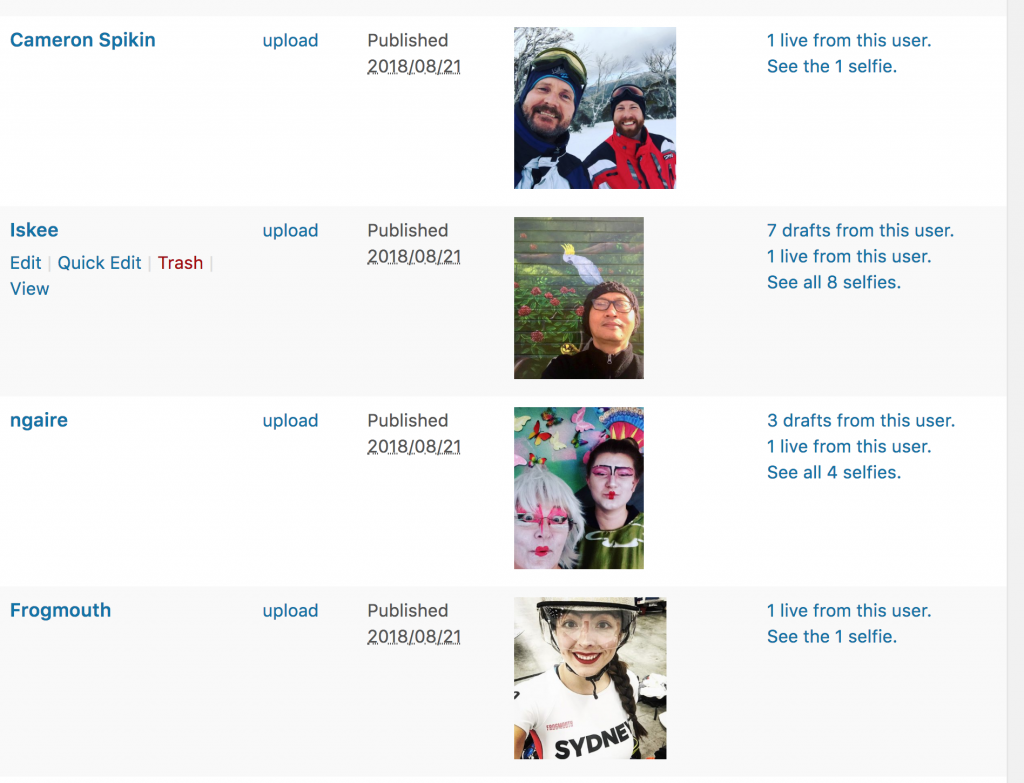

Pre-Moderation (Instagram Selfies)

In contrast, Instagram selfies were pre-moderated. We set the scraper to upload the new Instagram selfies as unpublished (i.e. draft) and manually approved them in batches at various spare moments throughout the day. We didn’t have to worry about nudity or violent imagery because Instagram was great at removing dubious content before we got it. The manual approval process was mainly to reject images that were not technically selfies and avoid any one user sending too many similar selfies.

Rejected selfies were put in WordPress’ trash, so it was necessary for the scraper to check this when adding new Instagram selfies. We also disabled emptying the trash, to stop rejected images coming back into the moderation queue.
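The check itself is simple: anything already stored, whatever its status, is skipped. Here is a minimal sketch, assuming the existing records are available as a mapping from a post identifier to its WordPress status; the `plan_ingest` helper and the identifiers are hypothetical.

```python
def plan_ingest(harvested_ids, existing_statuses):
    """Return the harvested ids that should be uploaded as new drafts.

    `existing_statuses` maps an id to its status ("publish", "draft" or "trash").
    Trashed items count as existing, so a rejected selfie is never re-added.
    """
    to_add = []
    for post_id in harvested_ids:
        if post_id in existing_statuses:
            continue  # already approved, awaiting moderation, or rejected
        if post_id in to_add:
            continue  # the same post appeared twice in this harvest
        to_add.append(post_id)
    return to_add


existing = {"abc123": "publish", "def456": "trash"}
new_drafts = plan_ingest(["abc123", "def456", "ghi789"], existing)
```

This only works while rejected items stay in the trash, which is why emptying it had to be disabled.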

It was great to see the enthusiasm that several users had for the project, some adding dozens and dozens of images, but we needed to be mindful of the balance of faces displayed. We didn’t want one person appearing too often, even if in different shots. Of course, given the long timeframe over which we were collecting content, it became difficult for moderators to remember how many selfies had previously been approved from a particular user. So we wrote some code to help us.

This code added links to the admin area when viewing the Instagram content. It showed how many other selfies had been ingested from the same Instagram user, in both published and draft statuses. Clicking these links showed these subsets, allowing easy decision making around what should be included and what was essentially duplicate content. Some users, for example, weren’t simply taking lots of shots of themselves, but rather of each member of their family in turn, so there were plenty of situations where we ended up allowing many images from one user.
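The counting behind such links can be sketched in a few lines. This is an illustration only, assuming each selfie record carries a `username` and a `status` field (both hypothetical names); the real helper lived inside the WordPress admin.

```python
from collections import Counter, defaultdict


def count_by_user_and_status(selfies):
    """Tally selfies per Instagram user, broken down by moderation status."""
    counts = defaultdict(Counter)
    for selfie in selfies:
        counts[selfie["username"]][selfie["status"]] += 1
    return counts


selfies = [
    {"username": "alice", "status": "publish"},
    {"username": "alice", "status": "publish"},
    {"username": "alice", "status": "draft"},
    {"username": "bob", "status": "draft"},
]
counts = count_by_user_and_status(selfies)
# A moderator viewing one of alice's drafts would see 2 published and 1 draft.
```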

Frontend Apps

All up, we built three frontend applications:

- Image Feed – 8m x 2m projection, Chrome Kiosk Mode

- Selfie Kiosk – Chrome Kiosk Mode

- Website – online at https://dxlab.sl.nsw.gov.au/newselfwales

While these apps were deployed in different contexts, we were able to build them all using web technologies. This enabled us to share code between apps – in fact, they all run off the same website. This makes deployments and code updates very convenient.

We used React JS, a popular JavaScript UI library, to build shareable components that snapped together like Lego for each app. For example, all three apps use the ImageFeed component that displays the scrolling images. The selfie kiosk and website use the ImageModal component to show individual images and info.

Communication with the backend architecture and WordPress is done through the DX Lab’s Global API (built using GraphQL – more on that in a future post).

Infinite Image Feed

Building an image feed that scrolls forever, with live images coming from two sources, and across three projectors proved to be quite the challenge. To top it off, the feed also had to be device responsive, usable on our selfie kiosk, desktop computers and mobile phones.

Masonry Tiles

To convey the scale and variety of our data, we purposefully engineered the image feed to feel organic and densely populated. We considered cropping images to visually organise the feed, but abandoned this approach due to the potential for heads and bodies to be cut off.

Rather than writing our own masonry style library, we used an open source project called Packery – it has a clever algorithm that helps pack as many images as possible into the available space. Packery also has some elegant built-in animations that run whenever new images are added, or old images are removed.

Scrolling

After some experimentation, we set the image feed to scroll at 0.5 pixels per second. This seemed like a good balance between keeping visitors engaged and avoiding motion sickness. We also decided on scrolling the images from right to left, as this would be the natural scrolling direction when using it on the touchscreen interactive.

With the feed moving constantly in one direction, we had to continually fetch more images from our API to fill in the empty space. This fetch occurs every 30 seconds or so, providing us an opportunity to display any new in-gallery selfies that were taken since the last fetch.

On the other side of the feed we had to handle the opposite problem. Although images disappeared once they scrolled past the left edge, they still took up memory in the application. After a few hundred images, we reached the memory limit and caused the browser to crash.

We had to experiment a few times to figure out the best solution. The masonry layout of the feed complicated matters further, so rather than fighting the masonry layout, we decided to leverage it.

Looking at the animation above, notice how the images on the left repack themselves, triggering a chain reaction of image animations towards the right. This is caused by removing off-screen images from the left of the screen. Due to Packery’s algorithm, images would move to the left, filling in the empty space off-screen. This was quite jarring, as images would suddenly fly offscreen.

To fix this, we did some image position calculations, reduced the empty left gap and moved the whole image feed to the right – all in a microsecond so it was imperceptible to the eye. The Packery library would then adjust itself and re-position the images, triggering the elegant animations from left to right.
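The position arithmetic can be sketched as follows. This is a simplified model rather than the production code: tiles are reduced to a left edge and a width, `offset` stands for the horizontal translation of the whole feed, and the `Tile` and `trim_offscreen` names are hypothetical. The key property is that each remaining tile keeps the same on-screen position after the trim.

```python
from dataclasses import dataclass


@dataclass
class Tile:
    id: str
    x: float      # left edge inside the feed container, in pixels
    width: float


def trim_offscreen(tiles, offset):
    """Drop tiles fully past the left screen edge and re-anchor the feed.

    `offset` is the container's horizontal translation (it becomes more
    negative as the feed scrolls left). Returns (kept_tiles, new_offset).
    """
    # A tile is still visible if its right edge is to the right of x = 0.
    kept = [t for t in tiles if offset + t.x + t.width > 0]
    if not kept:
        return [], 0.0
    # The empty space left behind by the removed tiles.
    gap = min(t.x for t in kept)
    # Pull the tiles left by the gap and push the container right by the same
    # amount, so offset + x is unchanged for every remaining tile.
    shifted = [Tile(t.id, t.x - gap, t.width) for t in kept]
    return shifted, offset + gap


tiles = [Tile("a", 0, 100), Tile("b", 100, 100), Tile("c", 200, 100)]
kept, new_offset = trim_offscreen(tiles, offset=-150)
```

Doing both adjustments in the same frame is what hides the change from the viewer.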

This is demonstrated below, with the pink line showing what happens to offscreen images.

Healthchecks

To monitor uptime for the gallery apps, we used a service called Healthchecks (https://healthchecks.io/). We configured Healthchecks to expect some data from our apps every 2 minutes. If no message was received, Healthchecks sent an email warning us of any potential issues.
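A heartbeat of this kind amounts to a periodic HTTP request to a per-check URL. Here is a minimal sketch, assuming a placeholder ping URL supplied by the caller; the `send_heartbeat` helper and its `opener` parameter (there to make the function testable without a network) are hypothetical.

```python
import urllib.request

PING_INTERVAL_SECONDS = 120  # matches the 2 minute expectation described above


def send_heartbeat(ping_url, opener=urllib.request.urlopen, timeout=10):
    """Ping the monitoring service; return True on success, False on failure."""
    try:
        with opener(ping_url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError and HTTPError are both subclasses of OSError. A failed ping
        # is simply skipped; the service alerts once pings stop arriving.
        return False
```

An app would call `send_heartbeat` on a timer every `PING_INTERVAL_SECONDS`. If the app crashes or loses connectivity, the pings stop and the service raises the alarm.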

Website

Because all our gallery applications were built with web technologies, it was relatively easy to re-use the same code and build a #NewSelfWales experience online.

In addition to the Image Feed, we added a new search feature to the website. This allows users to search through the whole #NewSelfWales dataset, which comprises 7,267 in-gallery selfies, 919 Instagram selfies and 5,426 collection portraits.

To enhance the search experience, we added the ability to filter by image type. We also added curated search suggestions that highlighted some of the fantastic content in the Library’s collection, e.g. Max Dupain, Architect and Newtown.

Fetching vs Subscriptions

As previously mentioned, the Image Feed periodically polls our API for new images to ensure there is no empty space while scrolling. This was going to be problematic once we launched the website publicly – we weren’t confident our API could handle the load, especially if there were multiple people on the website, each fetching several times a minute. Caching would not be possible because every API call needed some random images to keep the feed fresh and alive.

The solution came from another DX Lab gallery project called O.R.B.I.T, which launched at the same time as #NewSelfWales. It is essentially a visualisation of our Readers’ real-time use of the catalogue (more to come in another blog post). Displayed in the Circulation Gallery, this two-screen experience uses the WebSocket protocol to enable two-way communication between a dashboard app and a projection running on another computer.

Instead of fetching, the new Image Feed subscribes to a server, waiting for updates. A small service triggers the subscription feed with new images every 20 seconds. Anyone viewing the website receives those updates immediately, with the added advantage of seeing the same images as everyone else, making it a truly global feed.
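The difference from polling can be shown with a small in-memory model. This sketch leaves out the network layer entirely, and the `FeedBroadcaster` class, its method names and the batch size are hypothetical. What it demonstrates is that the random selection happens once per tick, however many viewers are connected, and every viewer gets the same batch.

```python
import random


class FeedBroadcaster:
    """Pushes one shared batch of images to all subscribers on each tick."""

    def __init__(self, image_pool, batch_size=3, rng=None):
        self.image_pool = list(image_pool)
        self.batch_size = batch_size
        self.rng = rng if rng is not None else random.Random()
        self.subscribers = []

    def subscribe(self, callback):
        """Register a viewer; returns a function that removes it again."""
        self.subscribers.append(callback)

        def unsubscribe():
            self.subscribers.remove(callback)

        return unsubscribe

    def tick(self):
        """Pick one random batch and send the same batch to every viewer."""
        size = min(self.batch_size, len(self.image_pool))
        batch = self.rng.sample(self.image_pool, size)
        for callback in list(self.subscribers):
            callback(batch)
        return batch


# Two viewers receive identical updates from a single selection.
broadcaster = FeedBroadcaster(["img1", "img2", "img3", "img4", "img5"])
seen_by_a, seen_by_b = [], []
stop_a = broadcaster.subscribe(seen_by_a.append)
broadcaster.subscribe(seen_by_b.append)
broadcaster.tick()  # in production a timer would call this every 20 seconds
```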

Projectors and Hardware

The main in-gallery display was created by three projectors suspended from a purpose-built truss. These were connected via HDMI to a tower PC concealed in the wall containing a ‘beefy’ graphics card. The projectors were configured as a single large (5760px by 1200px) screen using the graphics card’s ‘mosaic mode’ with Chrome displaying the Image Feed in full-screened kiosk mode.

A lot of pain and effort was put into working out exactly where and how to locate the projectors. The Lab already had these projectors, but unfortunately the limitations of their lens throw made it tricky to work out the ideal arrangement. Furthermore, the fixing and location of the truss was limited by both structural and heritage concerns, adding yet more restrictions on the set up. Expert consultants were engaged and did a great job of designing, installing and configuring the system.

Summary

Exhibition Outcomes

At the beginning of this project we were unsure whether we would get more selfies via Instagram or in-gallery. As the numbers above reveal, it was heavily skewed toward in-gallery contributions. On average 52 gallery selfies were taken per day, compared with around 4 a day for Instagram.

In all, 2,639 unique hashtags were used with the Instagram selfies. The top twenty are shown here with their counts in parentheses (#NewSelfWales is not shown, as it was of course attached to every image we collected):

#sydney (89)

#selfie (55)

#australia (45)

#portrait (40)

#nsw (25)

#photography (25)

#statelibrarynsw (24)

#family (20)

#portraitphotography (18)

#love (17)

#life (15)

#artist (15)

#art (14)

#selfportrait (14)

#picoftheday (14)

#sydneylocal (14)

#goulburn (14)

#sffsimonselfie (12)

#portraits (12)

#travel (12)

We have written more on the outcomes of the exhibition in this blog post.

Collecting Data

A major part of this experiment was to ‘collect the face of New South Wales’ as contributed by the public. We will archive the website, keep the images in WordPress and have an item level description in the Library’s catalogue of the overall experience.

Hardware Issues

All in all, things ran very smoothly considering that over 7,000 images were taken using the selfie kiosks. However, the odd hardware issue did arise. One of the two in-gallery cameras was taking blurry images because the webcam had been glued slightly too close to the 3D printed housing. This stopped the autofocus from working, but luckily we had a spare.

Next, one of the arcade buttons stopped triggering the selfie capture. A cable had come loose inside the camera housing, nothing a bit of gaffer tape couldn’t fix. Later one of the cameras kept going ‘blank’ which could be fixed by unplugging it and plugging it back in. A more permanent fix turned up when we looked inside the enclosure to discover one circuit board had come loose and was shorting the camera when moved about by visitors. Gaffer tape to the rescue again.

Digital Resilience

While a camera failing would not be the end of the world, ensuring the smooth running of the three-projector image feed was critical. We had multiple contingencies if anything went wrong. As the image feed was a web application, we had a spare server and database on hand if anything went down. The PC running the image feed was connected by ethernet, so internet connectivity was assured. To ensure that we could sleep well at night, we screen captured the whole feed for 10 minutes and saved it onto the PC in case of emergency.

Thanks

Before launch, this project was billed as DX Lab’s largest experiment ever – it certainly lived up to that claim. We couldn’t have done it without collaborating with several key Library teams. Many thanks to Exhibitions and Design, Media and Communications, Research and Discovery and ICT Services.

We were thrilled that #NewSelfWales was a finalist for a Museums and the Web GLAMi award in the Exhibition Media or Experience: Non-Immersive category.
