80Hz: part 2. Sound and tech

This post is the second of three which describe the results of Thomas Wing-Evans’ research at the DX Lab, the outcome of which is the 80Hz Sound Lab, an interactive sound pavilion installed recently on the Library’s forecourt. In part two we interview Julian Wessels from Sonar Sound, who talks about the process of creating the audio. We then explore how the technology inside the installation works. If you missed it, part one is here.

DX Lab: “We need audio interpretations of forty four paintings” is a pretty strange brief. How did you initially plan to tackle this?

Julian: It’s an amazing brief! As a sound designer it’s the brief you dream of. We started by researching if anyone else had done something like this, and while some people had produced similar works it didn’t seem like anyone had done this specifically and that’s where it got really exciting. So with that blank slate we thought about what experience we wanted the viewers to encounter. We didn’t want it to be too abstract so we set about creating a set of rules that could filter the metadata into a cohesive work.

DX Lab: You ended up creating a custom-made instrument in software, which the paintings ‘play’ to some degree to create the soundscapes. Can you tell us about the technologies your instrument is comprised of and how you decided on using them?

Julian: We needed something bespoke and very flexible so we created the program in Max. This allowed us to play and experiment with different approaches until we settled on a system that worked. Central to the application was a set of tools for Max by VJ Manzo that allowed us to build our program within a very musical framework.

DX Lab: Can you explain how the image itself and the related metadata influence various aspects of each soundscape and how you decided on those relationships?

Julian: It was a process of analysing the metadata and looking for patterns or similarities that could inform different aspects of the composition. One of the biggest challenges we faced was giving the metadata some sort of structure. It’s all very well to assign a set of data to a set of parameters but that all needs to occupy a space in time. We decided to treat each painting as its own sequencer. This meant that we could divide the paintings into a grid based on physical dimensions: small paintings were split into a 4×4 grid, medium sized paintings into an 8×8 grid and large paintings into a 16×16 grid, with the predominant colour within each region assigned to its square. This gave a set of block colours that could each be assigned to a chord within a given musical scale. We took this further and used complexity (the number of distinct colours detected) to inform how these chords were voiced: fewer distinct colours meant that the chords were restricted and played closer together, while the more distinct colours that were detected, the wider and more complex the chords became.
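To make the "painting as sequencer" idea concrete, here is a minimal Python sketch. It is not Sonar's Max patch. The grid sizes come from the interview, but the colour labels, the `CHORD_FOR_COLOUR` table and the function names are assumptions made up for illustration, and the sketch assumes the image is at least as many pixels wide and high as the grid.

```python
from collections import Counter

# Grid size per painting size class, as described in the interview.
GRID_SIZE = {"small": 4, "medium": 8, "large": 16}

# Hypothetical colour-to-chord table (scale degrees in a major key).
CHORD_FOR_COLOUR = {"red": "I", "blue": "IV", "green": "V", "yellow": "vi"}


def dominant_colours(pixels, size_class):
    """Split a 2D list of colour labels into an n x n grid and return
    the most common colour in each cell, row by row."""
    n = GRID_SIZE[size_class]
    height, width = len(pixels), len(pixels[0])
    grid = []
    for gy in range(n):
        row = []
        for gx in range(n):
            # Pixel bounds of this cell.
            y0, y1 = gy * height // n, (gy + 1) * height // n
            x0, x1 = gx * width // n, (gx + 1) * width // n
            counts = Counter(
                pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)
            )
            row.append(counts.most_common(1)[0][0])
        grid.append(row)
    return grid


def chord_sequence(pixels, size_class):
    """Read the grid left to right, top to bottom, one chord per square."""
    grid = dominant_colours(pixels, size_class)
    return [CHORD_FOR_COLOUR[colour] for row in grid for colour in row]
```

A small painting therefore yields a 16-step chord sequence, a medium one 64 steps and a large one 256 steps.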

The melody generator that plays over the top of the chords has a few key pieces of metadata that inform how it operates. The intensity of the image informs the speed of the melody: high values generate notes rapidly, while medium and low values generate notes at half and quarter speed respectively. Whether or not there is a face in the image determines if the melody is random or ‘intelligent’. No face detected means that the random melody generator plays any note within the scale over any chord that is currently playing. When a face is detected the intelligent generator takes into account the current chord and weights the random melody generator to preference certain notes that are more familiar or pleasing within that chord.
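The two melody modes can be sketched in a few lines of Python. This is an illustration only: the C major scale, the `chord_weight` value and the function name are assumptions, and the real generator was built in Max. The speed ratios are the ones given above.

```python
import random

# Assumed scale for the example: C major as MIDI note numbers.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]

# Note rate relative to the fastest setting, per image intensity.
SPEED_FOR_INTENSITY = {"high": 1.0, "medium": 0.5, "low": 0.25}


def next_note(scale, chord_tones, face_detected, rng=random, chord_weight=4.0):
    """Pick the next melody note.

    Without a face, every note of the scale is equally likely.
    With a face, notes belonging to the current chord are favoured
    by `chord_weight` (a hypothetical value, not from the original).
    """
    if not face_detected:
        return rng.choice(scale)
    weights = [chord_weight if note in chord_tones else 1.0 for note in scale]
    return rng.choices(scale, weights=weights, k=1)[0]
```

With a C major triad (`{60, 64, 67}`) as the current chord and a weight of 4, roughly three quarters of the ‘intelligent’ notes land on chord tones, against about three in seven for the purely random mode.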

DX Lab: What techniques did you employ to ensure the entire set of soundscapes had enough variety in the sound?

Julian: One thing we wanted was a sense of distinction when moving between images and one piece of data that seemed to give us enough variation was category. All of the images fell into 10 categories that related to 10 instruments that we created for the installation; these instruments are all various piano sounds that have been played, processed or tortured in some way to create 10 unique instruments. Similar processes were applied to each instrument. There are five pianos, 10 chordal instruments, 10 melody instruments and four bass instruments to choose from, giving us a possible 80,000 combinations purely based on different combinations of those instruments.

DX Lab: There were six speakers inside the 80Hz Sound Lab, three spread along the span of the ceiling, one on the floor at the opposite end to the door and two subs under the floor. How did you make use of this speaker set up?

Julian: The 3 speakers above you carried a wide mix of the compositions as well as an ambient layer based on the location of the painting. The speaker on the floor also had location-based sound FX. The root note of the chord is doubled and lowered an octave to send to the subs under the floor. This speaker set up surrounded you with sound and gave a sense of immersion.
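As a small worked example of the sub-bass routing, the sketch below copies a chord's root and drops it an octave. It assumes chords are lists of MIDI note numbers in root position (root first); the function names are made up for illustration.

```python
def sub_bass_note(chord):
    """Return a copy of the chord's root, lowered one octave (12 semitones).

    Assumes the chord is in root position, so the root is the first note.
    """
    return max(chord[0] - 12, 0)


def midi_to_hz(note):
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)
```

Lowering a note by an octave halves its frequency, so a C major chord rooted on middle C (about 262 Hz) sends a C at about 131 Hz to the subs.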

DX Lab: Was there a tension between how much control you wanted to have in how the soundscapes should sound and letting the picture and the metadata create the sound?

Julian: We tried to be as hands off as possible, which is easier said than done, but once we had a set of rules and filters that we felt happy with we just applied the same logic to all 40 paintings, then stood back and let it create itself. We really wanted to let the paintings speak for themselves so this was a really crucial step and I think that the results are surprisingly beautiful. It’s been an interesting journey because no matter how judicious you are with your process you can’t avoid infusing meaning in some way. I’ve been watching some people use it and say things like “this one sounds sad because the picture is sad” or “this one is really dark because the picture is dark” but in reality none of those things are accounted for in our compositions. Even though this has been a data driven process, as humans we are constantly searching for meaning and it’s been really interesting to step back and see how people interpret the sounds for themselves.

There is no predefined way to translate paintings into sound, so I think what we’ve created is an environment for each of these paintings to occupy a sonic space within that environment that the viewer has to navigate. While you may not immediately see a correlation between the painting and its accompanying soundscape we hope that by exploring the different soundscapes the viewers can discover their similarities by their relationship to each other.

DX Lab: I understand there is a second software instrument used to play back the compositions inside the structure itself. Can you tell us about that, and how the transitions between soundscapes work, as visitors turn the handle?

Julian: This program was set up to create smooth transitions between the compositions when the visitor turned the handle, but there were some gems hidden in these transitions. As the viewer moved off the image the current soundscape filtered out and a series of effects were applied to deteriorate the sound as the next image faded in and this is the realm where it gets a bit interesting. The program was set up in such a way that you couldn’t stray too far off the chosen path but there was also space to learn the subtle nuances of the device and ‘play’ it like an instrument. We wanted to add this layer as a reward to encourage exploration.

How it worked: By Luke Dearnley (DX Lab developer).

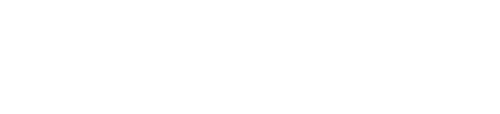

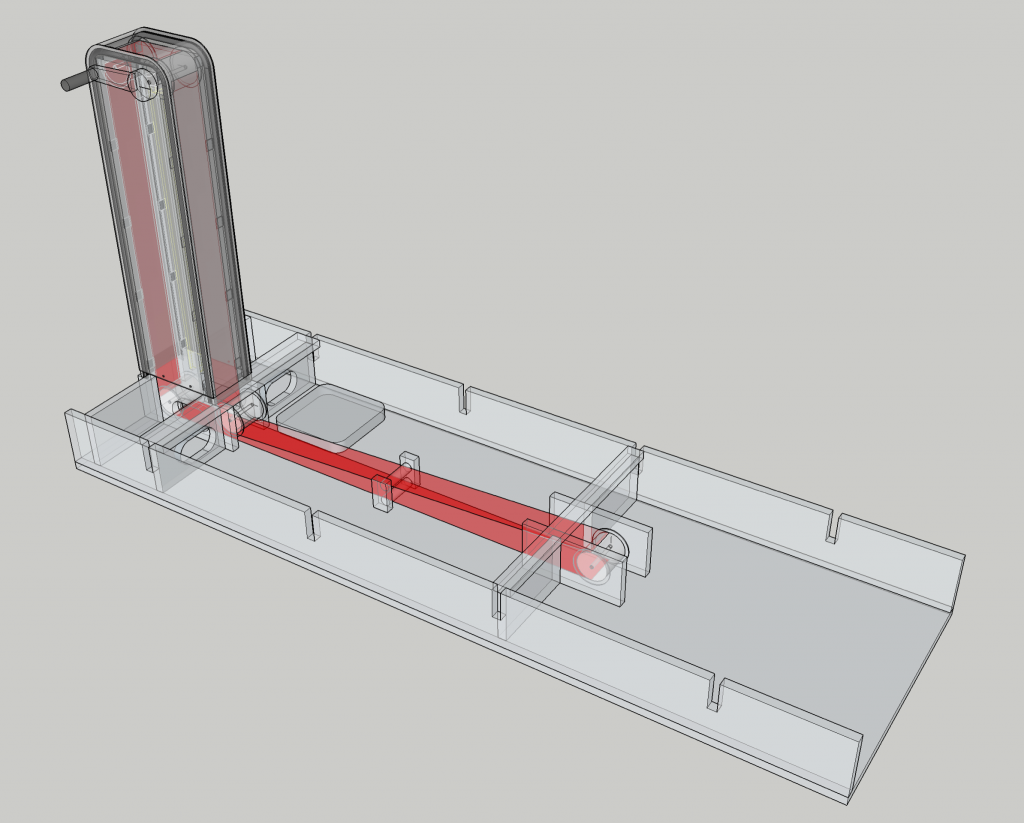

In order for the computer in the Sound Lab to play the correct sound for the image being shown atop the central column, it needed to know the position of the belt to which the images were affixed. My original idea was to have a series of unique symbols or glyphs on the underside of the belt and a camera looking up at them. I was planning to use Max/MSP to analyse the image from the camera and determine which glyph was in view. Before I got too far down that road I realised a far simpler and more reliable solution was to use RFID tags. So the position of the belt was determined by scanning the RFID tags stuck along its length. There were nearly 300 of them stuck side-by-side along one edge of the belt (one every 18mm).

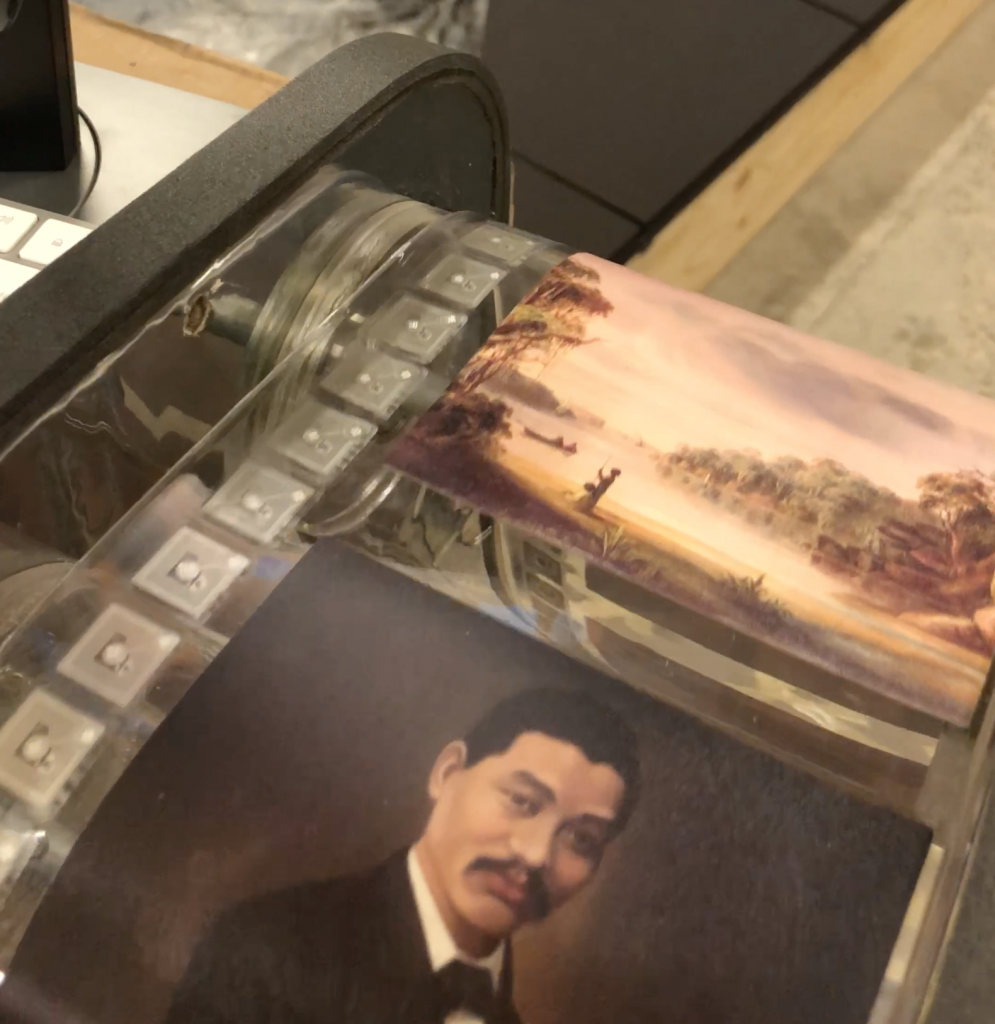

The RFID reader board was attached to an Arduino Uno micro-controller, which in turn was connected via USB to the Mac Mini running Max/MSP. The code for these was adapted from Luke Woodbury’s work here:

The Max/MSP patch which took the RFID tag ID from the Arduino was absorbed into the main Max/MSP patch from Sonar which played back the audio. As the sensor was actually twice the width of the tags, about half the time it repeatedly read two adjacent tag IDs in turn. In order to stop this ‘jittering’ between values I realised I could double each tag’s value and report the average of the current doubled value and the previous one. This ended up giving us an integer between 0 and 564, which worked out as a 9mm resolution in the belt position tracking, or 14 values per image.
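The doubling-and-averaging trick is easy to see in a short Python sketch. The real logic lived in the Max/MSP patch; the class name here is made up, and the sketch assumes each tag ID has already been translated into its index along the belt.

```python
class BeltTracker:
    """Turn a jittery stream of tag indices into a stable belt position."""

    def __init__(self):
        self.previous = None  # last doubled tag index seen

    def update(self, tag_index):
        doubled = 2 * tag_index
        if self.previous is None:
            # First reading: nothing to average with yet.
            self.previous = doubled
        # Average of the current and previous doubled values.
        # Both are even, so the result is always a whole number.
        position = (doubled + self.previous) // 2
        self.previous = doubled
        return position
```

A steady read of tag 5 reports 10, and a steady read of tag 6 reports 12. When the reader flips between tags 5 and 6 the average settles on 11 whichever of the two was read last, so the half-way position becomes a stable value of its own:

```python
tracker = BeltTracker()
print([tracker.update(i) for i in [5, 5, 6, 5, 6, 6]])  # [10, 10, 11, 11, 11, 12]
```

Each step of the result corresponds to half a tag width, which is where the 9mm figure comes from.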

Julian’s patch used these integers to work out which image was currently in view, and thus which soundscape should be heard. The 14 different values for one image let the patch know whether the image was completely in view, or whether the visitor was moving to the next or previous image, and how far along that transition they were. This information was used by the Max patch to ‘filter out’ the soundscape for the image we were moving away from, while also ‘filtering in’ the soundscape for the image moving forward.
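As a rough illustration of how a position value might be turned into playback levels, here is a Python sketch. It makes several assumptions that are not in the original: that an image is fully in view at the start of its block of 14 values, that the remaining values count progress towards the next image, and that a plain linear crossfade stands in for the filtering done in the Max patch. `image_count` is a parameter because the belt is a loop.

```python
VALUES_PER_IMAGE = 14  # position values per image, from the text above


def locate(position):
    """Return (image index, progress towards the next image in [0, 1))."""
    image, offset = divmod(position, VALUES_PER_IMAGE)
    return image, offset / VALUES_PER_IMAGE


def mix_levels(position, image_count):
    """Return playback levels for the two soundscapes involved.

    The outgoing soundscape fades out as the incoming one fades in.
    Indices wrap around because the belt is a continuous loop.
    """
    image, progress = locate(position)
    image %= image_count
    next_image = (image + 1) % image_count
    return {image: 1.0 - progress, next_image: progress}
```

For example, `mix_levels(35, image_count=10)` is half way between images 2 and 3 and returns `{2: 0.5, 3: 0.5}`. The direction of travel could be found by comparing each position with the one before it.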

There was a second Arduino Uno which was taking information from Max/MSP and using it to control the internal lights in the structure. The lights were simply several metres of warm white 12v LED strip, wrapped helically around a length of broom handle, before having a frosted perspex tube slipped over them.

The Max/MSP patch periodically converted the instantaneous volume of the audio it was creating into a value and sent it via USB to the second Arduino. The Arduino in turn adjusted the pulse-width of a square wave driving a MOSFET, which throttled the flow of power from a 12v DC high-current supply to the LED strips inside the lights. Thus the brightness of the lights appeared to reflect the volume of the sound being generated, creating a more immersive experience.
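The volume-to-brightness mapping can be sketched in Python as follows. The actual conversion happened in Max/MSP and on the Arduino, so the function names and the 0.0 to 1.0 level range are assumptions. The 0 to 255 duty range matches the 8-bit PWM values that an Arduino Uno's `analogWrite` accepts.

```python
def level_to_duty(level, floor=0):
    """Map an audio level in [0.0, 1.0] to an 8-bit PWM duty value.

    `floor` is a hypothetical minimum brightness so the lights never
    go fully dark; levels outside the range are clamped.
    """
    level = min(max(level, 0.0), 1.0)
    return floor + round(level * (255 - floor))


def duty_to_average_volts(duty, supply_volts=12.0):
    """Average voltage seen by the LED strip for a given duty value."""
    return supply_volts * duty / 255
```

A full-scale level gives a duty of 255, so the MOSFET is on all the time and the strip sees the full 12 V. A level of 0.2 gives a duty of 51, or about 2.4 V on average. Since the value fits in one byte, it could be sent over the serial link as `bytes([level_to_duty(level)])`.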

The smaller structure was a seat which projected images of the paintings on the ground in front of whoever was seated at it, while the corresponding soundscape for that image was played via a pair of speakers above. After a period of time, the image slid across and a new image came into view while the soundscape changed to the one for the new image. After all 44 images had been shown, the content looped.

Since the content for this was simply a video file with stereo audio we found the easiest way to realise the set up was using a BrightSign player connected to a video projector and a pair of active speakers similar to those in the larger structure.

Read about the design development and fabrication process in the third and final post.